Public Betas

The first reviews of the Humane AI Pin have dropped, and they’re, uh, not great.

There is no one failure here; rather, there is a lethal combination: a product that is both poorly thought out and poorly implemented. Humane’s goal of replacing the smartphone in people’s lives is as ambitious as it is ill-conceived. Phones are central to our lives, and they earned that position by being good tools for what we do with them, and tools we actually like to use.

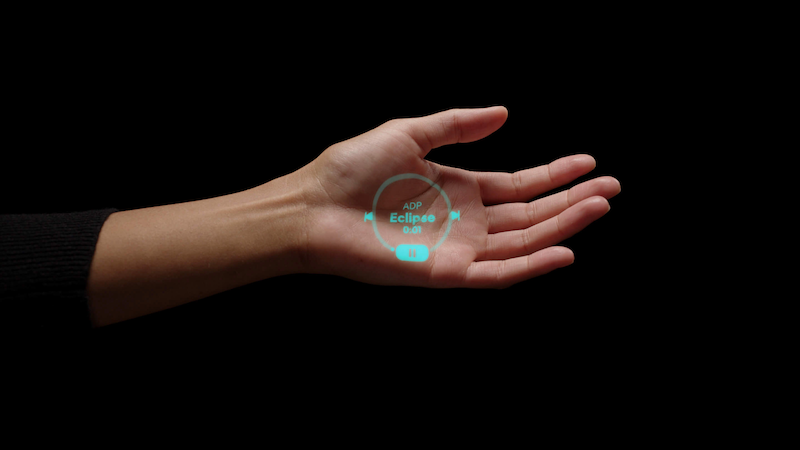

Humane makes a big deal of not making users look at a screen, having them interact with the device instead by touching it, speaking to it, and waving one hand about vaguely in space. This all sounds incredibly awkward, and worse, most of it can already be done with a phone connected to AirPods and Siri.

The one unique benefit the AI Pin can claim, and one that would be difficult to deliver today, is the camera on the user’s lapel that can see what they are holding or looking at. This capability is potentially interesting, with roots going as far back as the forehead-mounted camera in Vannevar Bush’s famous essay, As We May Think, where he introduced the Memex and the concept of hypermedia. What is not clear, though, is why this camera needs to be a discrete standalone device. Most of the recognition features rely on offboard servers in the cloud anyway, so why not proxy through a phone? And if there is a phone in the mix, maybe it can run some of the models itself, avoiding the lag and unreliability that are still features of cloud LLMs in 2024.

Even the more positive reviews recognise these issues, but excuse them on the basis of the device’s future potential. Humane claims that many of the shortcomings will be addressed in forthcoming updates, and anyway, it’s the long-term vision that matters. The AI Pin will (eventually) free us all from our phone dependency — whether we want it to or not.

This hyping of the nebulous future potential of a device whose current incarnation is somewhat disappointing all sounds very familiar. Apple’s Vision Pro suffers from many of the same problems as Humane’s AI Pin: neither one is really a product, or at least, not yet. Both are better considered as technology demonstrators, the founders of lineages which will deliver value at some point in the dim future.

Here is an example of the sort of special pleading that surrounds Vision Pro:

> I’m not declaring that the Vision Pro has a special destiny because there’s no way to know that. But I do feel comfortable suggesting that those who are declaring it a dead end and a failed product might want to consider how foolish it would have been to say the same thing about a Commodore PET or TRS-80 in 1977.

Since we’re referring back to the dawn of the personal computer, it might be worth reviewing the Osborne effect, where talking up a better future product too early depresses sales of the current one. Humane and Apple both need to sell the devices they have available now, although Apple can afford to lose money on speculative notions for quite a bit longer than Humane can.

In Apple’s case, we have been here before. I am reading Inventing the Future right now, about the history of Apple’s Advanced Technology Group, and just came to this passage:

> Future Apple III Product Manager David Fradin recalled:
>
> “About 100,000 Macs were purchased in the first year, mostly by developers and early adopters. The developers figured out they could not write robust software with only 10K available. Early adopters got frustrated because there was not much software for their $2,000 soon-to-be toy.”
>
> Apple’s Retail Marketing Manager John Scull recalls for CM:
>
> “…for the first little bit, people were just buying it because it’s cool. But then to go beyond the early adopters and you’re now starting to get into people that have to justify why they’re doing it, all of a sudden it needed to be able to do things.”

“People were just buying it because it’s cool”. That certainly sounds like both the Vision Pro and the AI Pin. They both represent potentially cool notions, just ones that are not fully realised in the here-and-now.

The Vision Pro has been described as technologically two years ahead of its time, which is also why it costs so much more than other VR goggles on the market today. Horace Dediu notes as much in his review:

> After using the product for a few weeks my observation is that the development of the Vision Pro appears to have been an engineering-led heavy lift rather than a design-led puzzle solving.

I’ve written about this same phenomenon myself, where someone comes up with some tech that is undeniably cool but lacks a reason for people to spend their precious time and money on it.

The issue Apple faced with that original Mac, and is facing again with the Vision Pro, is that superlative technology is not enough to win. Users need something to do with their cool new device, and that means content. Content development is precisely the bottleneck here: Apple hasn’t produced it, and neither have third-party developers. Apple has of course not been doing itself any favours with its developer community lately, but there is also an intrinsic problem: 3D content in general, and VR content specifically, is terrifyingly expensive and time-consuming to produce compared with “flat” content.

The only explanation for Apple’s Vision Pro strategy that makes sense to me is that the current iteration is best seen as a developer beta, not an actual public release. Apple is getting it into people’s hands, in the hope that they will build the content that will get actual users on board, and from that convergence, some compelling use case will emerge. The first part of this strategy worked with the Watch, after all: Apple thought it could be useful for all sorts of things, but the users have spoken, and it is primarily a health tracker and secondarily a notification system for the phone.

Humane has a different problem: they were too opinionated in taking on the phone. A device like the AI Pin that worked in conjunction with the phone would be a lot more compelling to users. Such a simplified device could avoid many of the issues that bedevil the actual AI Pin by offloading those features to the phone, which is demonstrably very good at them already, instead of wasting scarce development resources trying to reinvent the wheel in ways that do not help users.

One part of the AI Pin user experience that many reviewers identified as promising is facing or holding something and asking the device to tell you about it or do something with it: give you the nutritional information of a foodstuff, make a booking at a restaurant, that sort of thing. To me, though, the greatest squandered potential is not this “what am I holding” scenario. In fact, I can see that scenario failing in the same way as Amazon’s ‘Just Walk Out’ tech has, by requiring an army of human labellers to be on hand for anything outside its experience. If Amazon failed in the relatively controlled setting of its own stores, where all the inventory is known by definition, what chance does Humane have of covering the entire world? Humans are constantly finding new things to look at (it’s literally one of our favourite activities), so the device would never be able to catch up.

No, the biggest miss is the gestures, and once again, there is a Vision Pro connection here. If AR/VR goggles like the Vision Pro ever take off in a big way, we will need better input modes than we have today. On the Vision Pro, by all accounts, the finger taps work well enough as action inputs, but looking at something to focus it (and losing focus if you look away) is something I have heard many complaints about.

The AI Pin has an input system that sounds interesting, if not fully developed, where users move their hands through space as if manipulating control devices directly. Something like this is going to be required if we are ever to give inputs in VR more complex than mouse clicks.

Or I could just get my phone out and tap something out there.

🖼️ Vannevar Bush picture from Wikimedia, Vision Pro picture from Apple web site, AI Pin navigation from Humane web site.