Artificial Effluent

A lot of the Discourse around ChatGPT has focused on the question of “what if it works?”. As is often the case with technology, though, it’s at least as important to ask the question of “what if it doesn’t work — but people use it anyway?”.

ChatGPT has a failure mode where it “hallucinates” things that do not exist. Here are just a few examples of things it made up from whole cloth: links on websites, entire academic papers, software for download, and a phone lookup service. These “hallucinations” are nothing like the sorts of hallucinations that a human might experience, perhaps after eating some particularly exciting cheese, or maybe a handful of mushrooms. Instead, these fabrications are inherent in the nature of the language models as stochastic parrots: they don’t actually have any conception of the nature of the reality they appear to describe. They are simply producing coherent text which resembles text they have seen before. If this process results in superficially plausible-seeming descriptions of things that do not exist and have never existed, that is a problem for the user.

Of course, that user may be trying to generate fictional descriptions, but with the goal of passing off ChatGPT’s creations as their own. Unfortunately, “democratising the means of production” in this way triggers a race to the bottom, to the point that the sheer volume of AI-generated spam submissions forced venerable SF publisher Clarkesworld to close to submissions (temporarily, one hopes). None of the submitted material seems to have been any good, but all of it had to be opened and dealt with. And it’s not just Clarkesworld being spammed with low-quality submissions, either; the problem is endemic:

> The people doing this by and large don’t have any real concept of how to tell a story, and neither do any kind of A.I. You don’t have to finish the first sentence to know it’s not going to be a readable story.

Even now, while the AI-generated submissions are very obvious, the process of weeding them out still takes time, and the problem will only get worse as newer generations of the models produce more prima facie convincing fakes.

The question of whether AI-produced fiction that is indistinguishable from human-created fiction is still ipso facto bad is somewhat interesting philosophically, but that is not what is going on here: the purported authors of these pieces are not disclosing that they are at best “prompt engineers”, or glorified “ideas guys”. They want the kudos of being recognised as authors, without any of the hard work:

> the people submitting chatbot-generated stories appeared to be spamming magazines that pay for fiction.

I might still quibble with the need for a story-writing bot when actual human writers are struggling to keep a roof overhead, but we are as yet some way from the point where the two can be mistaken for each other. The people submitting AI-generated fiction to these journals are pure grifters, hoping to turn a quick buck from a few minutes’ work in ChatGPT, and taking space and money from actual authors in the process.[1]

Ted Chiang made an important prediction in his widely-circulated blurry JPEGs article:

> But I’m going to make a prediction: when assembling the vast amount of text used to train GPT-4, the people at OpenAI will have made every effort to exclude material generated by ChatGPT or any other large language model. If this turns out to be the case, it will serve as unintentional confirmation that the analogy between large language models and lossy compression is useful. Repeatedly resaving a jpeg creates more compression artifacts, because more information is lost every time.

This is indeed going to be a problem for GPT-4, -5, -6, and so on: where will they find a pool of data that is not polluted with the effluent of their predecessors? And yes, I know OpenAI is supposedly working on ways to detect their own output, but we all know that is just going to be a game of cat and mouse, with new methods of detection always trailing the new methods of evasion and obfuscation.

To be sure, there are many legitimate uses for this technology. The key to most of them is that there is a moment for review by a competent and motivated human built in to the process. The real failure for all of the examples above is not that the language model made something up that might or perhaps even should exist; that’s built in. The problem is that human users were taken in by its authoritative tone and acted on the faulty information.

My concern is specifically that, in the post-ChatGPT rush for everyone to show that they are doing something — anything — with AI, doors will be opened to all sorts of negative consequences. These could be active abuses, such as impersonation, or passive ones, omitting safeguards that would prevent users from being taken in by machine hallucinations.

Both of these cases are abusive, and unlike purely technical shortcomings, it is far from a given that these abuse vectors will be addressed at all, let alone simply by the inexorable march of technological progress. Indeed, one suspects that to the creators of ChatGPT, a successful submission to a fiction journal would be seen as a win, rather than the indictment of their entire model that it is. And that is the real problem: it is still far from clear what the endgame is for the creators of this technology, or what (or whom) they might be willing to sacrifice along the way.

***

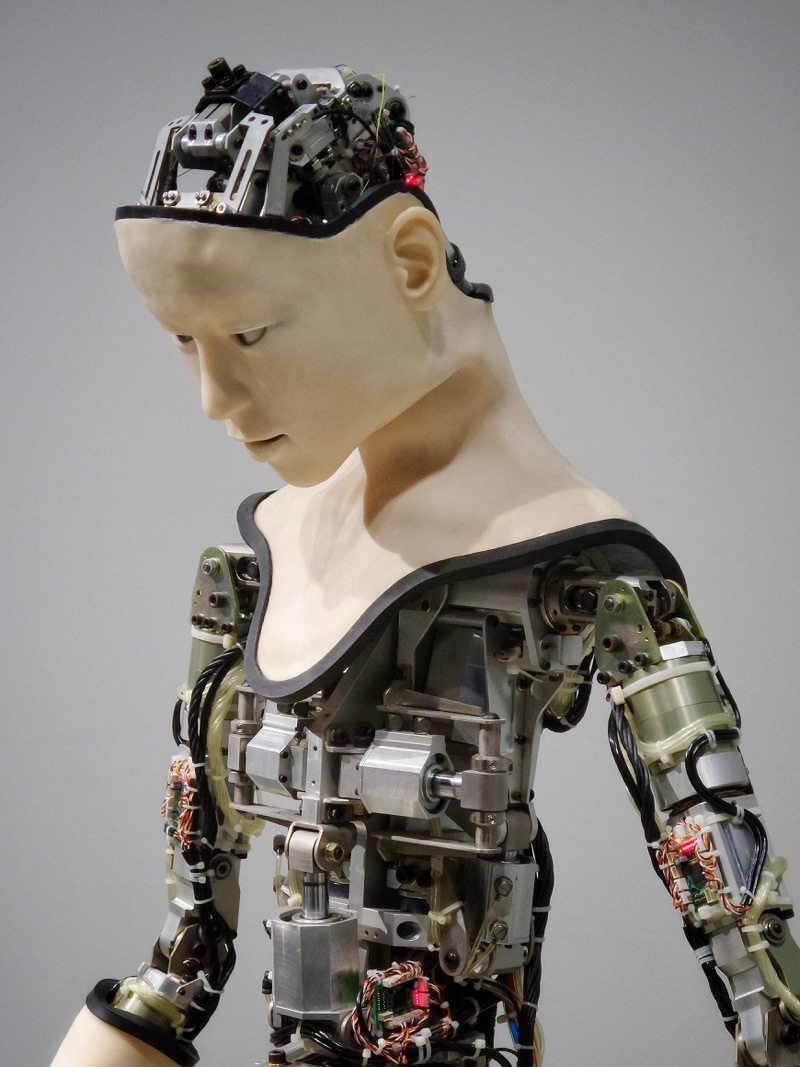

🖼️ Photo by Possessed Photography on Unsplash

[1] It’s probably inevitable that LLM-produced fiction will appear sooner rather than later. My money is on the big corporate-owned shared universes. Who will care if the next Star Wars tie-in novel is written by a bot? As long as it is consistent with canon and doesn’t include too many women or minorities, most fans will be just fine with a couple of hundred pages of extruded fiction product.